Automatic Music Transcription

Fall 2024 - present

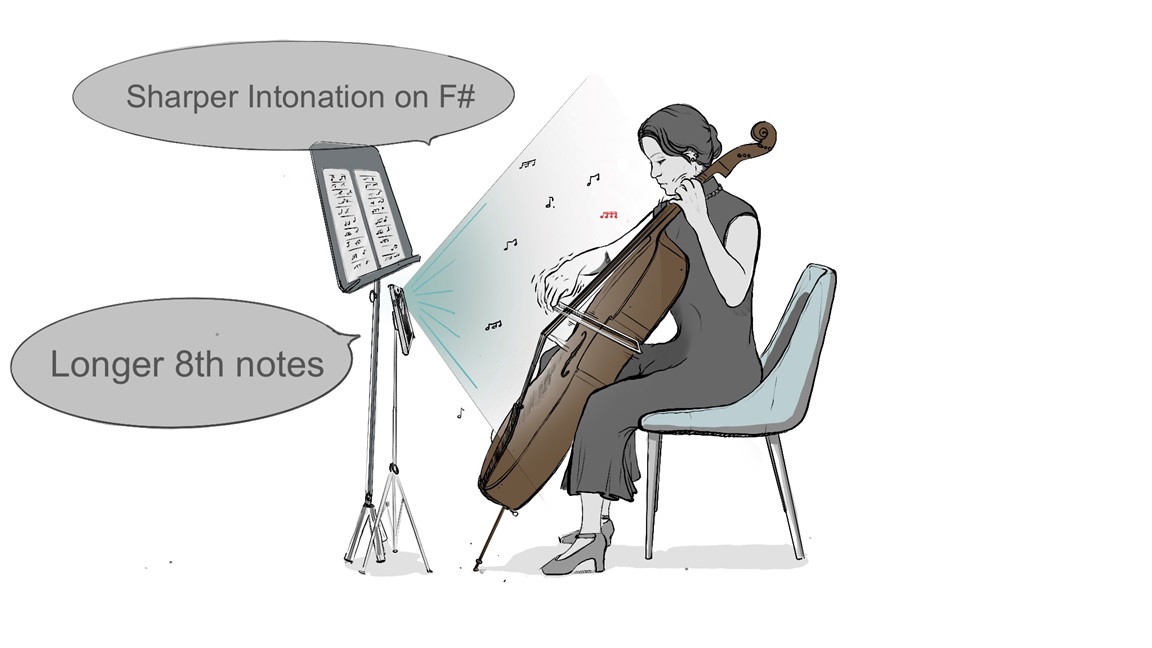

Automatic Music Transcription (AMT) is a project focused on streamlining audio-to-MIDI transcription for musicians and educators, with applications in isolating sounds in noisy environments. We are conducting a systematic review of AMT models, examining their strengths and limitations on complex, multi-instrument music. In April 2025, we hosted a competition that challenged participants to build accurate transcription models for classical music. We are currently building our own interpretable AMT model and exploring related directions, such as using computer vision to generate guitar tablature and quantifying a piece's "complexity" as an input for future AMT models.