Automatic Music Transcription

Fall 2024 - present

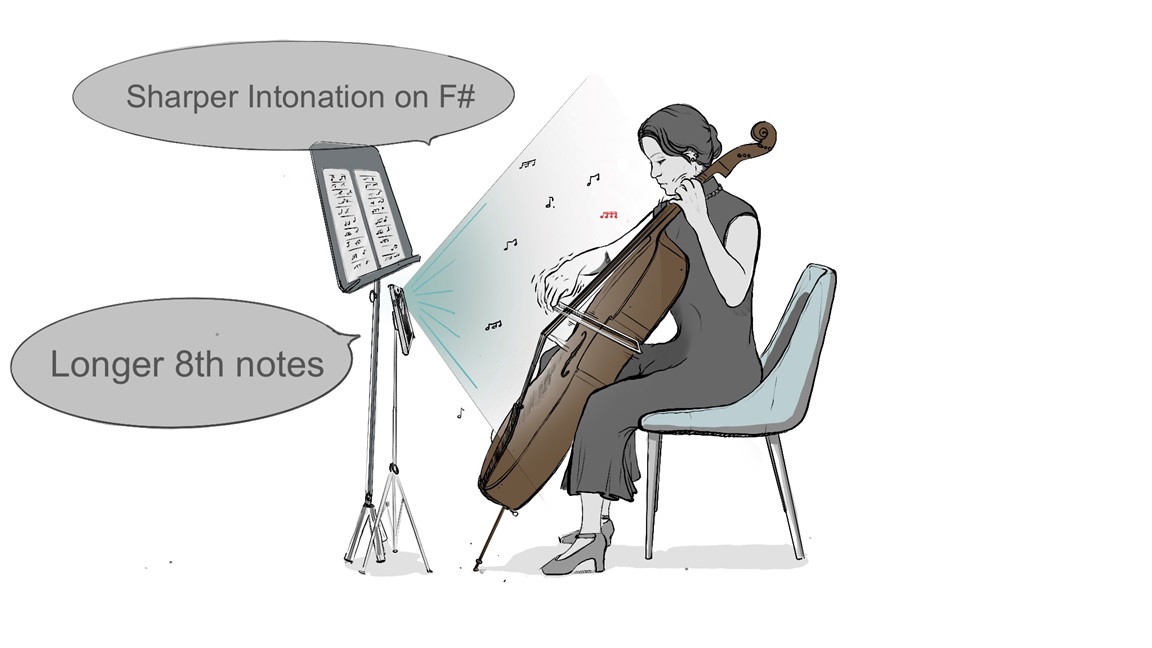

Automatic Music Transcription (AMT) is a project focused on streamlining audio-to-MIDI transcription for musicians and educators, with further applications in isolating sounds in noisy environments. We are conducting a systematic review of existing AMT models, examining their strengths and limitations on complex, multi-instrument music. To encourage new approaches, we are hosting a competition in April 2025 that challenges participants to build accurate transcription models for classical music. Together, the review and the competition aim to advance AMT and refine current transcription methods.